MEVID Person Re-Identification Data

- An additional 289 clips (approximately 24 hours) of previously unreleased MEVA video

- 158 identities, with an average of four outfits per identity

- 33 viewpoints

- 17 locations

- over 1.7M bounding boxes

- over 10.46M frames

Obtaining MEVID

Instructions for downloading MEVID annotations and supporting video may be found on https://github.com/Kitware/mevid.

Citing MEVID

The dataset is described in our paper, "MEVID: Multi-view Extended Videos with Identities for Video Person Re-Identification," due to appear in WACV 2023. The BibTeX citation is:

    @InProceedings{Davila_MEVID_2023,
      author    = {Davila, Daniel and Du, Dawei and Lewis, Bryon and Funk, Christopher and Van Pelt, Joseph and Collins, Roderic and Corona, Kellie and Brown, Matt and McCloskey, Scott and Hoogs, Anthony and Clipp, Brian},
      title     = {MEVID: Multi-view Extended Videos with Identities for Video Person Re-Identification},
      booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
      month     = {January},
      year      = {2023}
    }

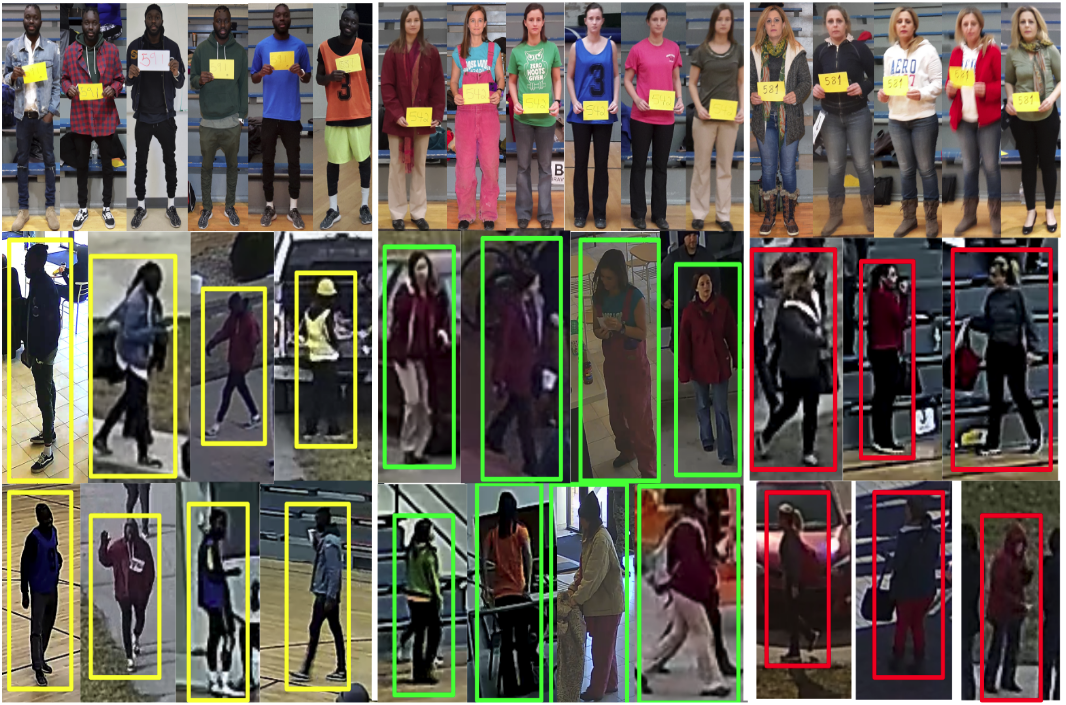

Actor check-in photos (top row) are associated with tracklets from MEVA videos (middle and bottom rows) to create global IDs.
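
The association described in the caption can be pictured as grouping per-camera tracklets under the check-in photo they were matched to. The Python sketch below is only an illustration of that idea: the `Tracklet` type, its field names, the camera labels, and the ID values are all hypothetical and are not taken from the MEVID annotation format, which is documented in the repository linked above.

```python
from collections import defaultdict
from typing import NamedTuple


class Tracklet(NamedTuple):
    """One per-camera track of a person. All field names are hypothetical."""
    camera: str      # made-up camera label, not a real MEVA camera name
    local_id: int    # track id assumed to be unique only within one clip
    checkin_id: int  # check-in photo this tracklet was matched to


def group_by_global_id(tracklets):
    """Group tracklets that share a check-in photo under one global ID."""
    groups = defaultdict(list)
    for tracklet in tracklets:
        groups[tracklet.checkin_id].append(tracklet)
    return dict(groups)


# Toy data: two tracklets of one actor seen by different cameras,
# plus one tracklet of a second actor.
tracklets = [
    Tracklet("cam_A", 3, checkin_id=17),
    Tracklet("cam_B", 8, checkin_id=17),
    Tracklet("cam_A", 5, checkin_id=42),
]
global_ids = group_by_global_id(tracklets)
```

In this toy setup the check-in ID doubles as the global ID, so tracklets with different local IDs from different cameras end up in the same group. Tracking outfits separately would require an extra key, which is left out here for brevity.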